LSTM-Transformer Model: A New Frontier in Mine Water Inflow Prediction

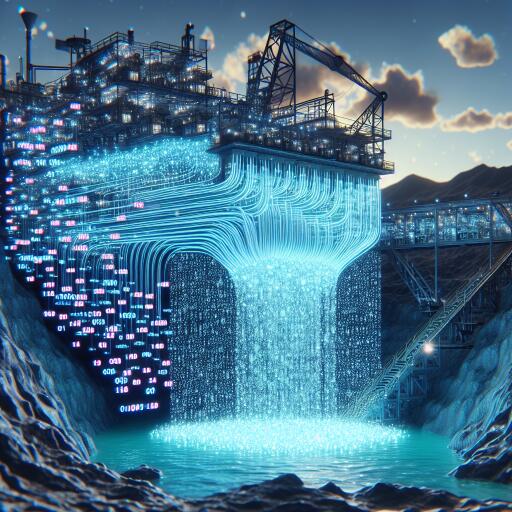

In a study published in Scientific Reports, researchers introduced a hybrid predictive model, the LSTM-Transformer, to improve forecasts of mine water inflow, a nonlinear time series that is notoriously difficult to predict. The model combines a Long Short-Term Memory (LSTM) network with the Transformer architecture, aiming to exploit the strengths of both to produce more accurate predictions of a quantity shaped by many external factors.

Understanding the Complexity of Mine Water Inflow

Mine water inflow is notoriously complex, shaped by geological structure, weather, groundwater movement, and mining activity. Its nonlinearity and temporal correlations make it hard to predict, and traditional models often fail to capture these effects, which has prompted the search for more capable alternatives.

The researchers stress the importance of preprocessing. The raw data are subjected to smoothness testing and cleaned of noise and outliers, so that the model learns the underlying patterns rather than anomalies. The study also reviews existing prediction models and notes that deep learning models used on their own, such as Convolutional Neural Networks (CNNs) and LSTMs, lack the self-attention mechanism built into Transformers, which lets a model weigh every time step in a sequence against every other.
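The article does not spell out the exact cleaning procedure. As a minimal sketch, assuming a simple interquartile-range (IQR) rule for outliers and a trailing moving average for noise reduction (both illustrative choices, not necessarily the authors'), the cleaning step might look like this:

```python
from statistics import median, quantiles


def clip_outliers_iqr(series, k=1.5):
    """Replace points outside [Q1 - k*IQR, Q3 + k*IQR] with the series median.

    Assumption: an IQR rule stands in for whatever outlier filter the study used.
    """
    q1, _, q3 = quantiles(series, n=4)   # the three quartile cut points
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    med = median(series)
    return [x if lo <= x <= hi else med for x in series]


def moving_average(series, window=3):
    """Trailing moving average; the first window-1 points average whatever is available."""
    out = []
    for i in range(len(series)):
        chunk = series[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


# Hypothetical inflow readings with one obvious spike.
raw = [120.0, 122.5, 121.8, 410.0, 123.1, 124.0, 122.7]
clean = clip_outliers_iqr(raw)
smooth = moving_average(clean, window=3)
```

Replacing an outlier with the median keeps the series length unchanged, which matters later when the series is cut into fixed-length windows; dropping points instead would silently shift the time axis.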

The Advent of the LSTM-Transformer Model

The researchers developed the LSTM-Transformer model using data from the Baotailong mine in Heilongjiang Province, China. The dataset, which is strongly nonlinear and has pronounced temporal dependencies, was preprocessed to reduce noise and filter out outliers.
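Before a sequence model can be trained, a single inflow series has to be cut into input/target pairs. The article does not describe how the Baotailong data were framed, so the sketch below assumes a common sliding-window setup with a one-step-ahead target and a chronological (unshuffled) train/test split; `lookback`, `horizon`, and `train_frac` are hypothetical parameters.

```python
def make_windows(series, lookback, horizon=1):
    """Turn a 1-D series into (input window, target) pairs.

    Each input is `lookback` consecutive values; the target is the value
    `horizon` steps after the end of that window.
    """
    X, y = [], []
    for start in range(len(series) - lookback - horizon + 1):
        X.append(series[start:start + lookback])
        y.append(series[start + lookback + horizon - 1])
    return X, y


def chronological_split(X, y, train_frac=0.8):
    """Split without shuffling so every test sample lies after the training samples in time."""
    cut = int(len(X) * train_frac)
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])


# Hypothetical usage on a short toy series.
X, y = make_windows(list(range(10)), lookback=3)
(train_X, train_y), (test_X, test_y) = chronological_split(X, y, train_frac=0.8)
```

Splitting chronologically rather than randomly keeps future observations out of the training set, which would otherwise inflate test accuracy.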

The hybrid model combines the LSTM's ability to capture long-term dependencies with the Transformer's self-attention mechanism. Self-attention processes all positions of an input sequence in parallel, complementing the LSTM's step-by-step processing, and helps the model identify complex patterns over time. Hyperparameter tuning was a key part of the work: the authors used both random search and Bayesian optimization to improve the model's performance.
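To make the division of labor concrete, here is a toy NumPy forward pass under stated assumptions: an LSTM reads the window first, then a single self-attention layer with a residual connection relates its hidden states to one another, and a linear head produces a one-step forecast. The layer order, sizes, and function names are hypothetical, the weights are random and untrained, and multi-head attention, positional encoding, layer normalization, and the feed-forward sublayer of a full Transformer block are omitted. It is not the authors' architecture.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x, W, U, b, hidden):
    """Run a single-layer LSTM over x (T x d_in) and return every hidden state (T x hidden)."""
    h, c = np.zeros(hidden), np.zeros(hidden)
    states = []
    for x_t in x:                                  # sequential: step t needs h from step t-1
        z = W @ x_t + U @ h + b                    # stacked gate pre-activations, shape (4*hidden,)
        i, f, g, o = np.split(z, 4)
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)                 # cell state carries long-term memory
        h = o * np.tanh(c)
        states.append(h)
    return np.stack(states)


def self_attention(H, Wq, Wk, Wv):
    """Scaled dot-product self-attention over all time steps at once."""
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])        # (T x T): every step scored against every step
    scores -= scores.max(axis=-1, keepdims=True)   # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True) # softmax over each row
    return weights @ V, weights


def init_params(d_in, hidden, scale=0.1):
    """Random, untrained weights (hypothetical); a real model would learn these."""
    def r(*shape):
        return rng.normal(0.0, scale, shape)
    return {
        "hidden": hidden,
        "W": r(4 * hidden, d_in), "U": r(4 * hidden, hidden), "b": np.zeros(4 * hidden),
        "Wq": r(hidden, hidden), "Wk": r(hidden, hidden), "Wv": r(hidden, hidden),
        "w_out": r(hidden), "b_out": 0.0,
    }


def hybrid_forward(x, p):
    """LSTM hidden states -> self-attention with a residual connection -> one-step forecast."""
    H = lstm_forward(x, p["W"], p["U"], p["b"], p["hidden"])
    A, weights = self_attention(H, p["Wq"], p["Wk"], p["Wv"])
    Z = H + A                                      # residual connection, as in Transformer blocks
    return float(Z[-1] @ p["w_out"] + p["b_out"]), weights


# Hypothetical input: 12 past time steps with one feature (e.g. normalized inflow).
window = rng.normal(size=(12, 1))
params = init_params(d_in=1, hidden=8)
prediction, attention = hybrid_forward(window, params)
```

The contrast drawn in the paragraph shows up directly in the code: `lstm_forward` must loop over time steps because each one depends on the previous hidden state, while `self_attention` scores all T × T pairs of positions with a single matrix product. The returned `attention` matrix has one row per time step, and each row sums to 1.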

Superior Performance and Implications

The LSTM-Transformer model outperformed the comparison models in predictive accuracy for mine water inflow. Its self-attention mechanism allowed it to capture long-range dependencies and intricate patterns, giving the mining industry a new tool for managing water inflow more efficiently and safely.
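The article reports that the model outperformed its competitors without listing the evaluation metrics. Forecast comparisons of this kind are commonly scored with root-mean-square error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and the coefficient of determination (R²). The sketch below assumes those four and uses made-up numbers, so it says nothing about the paper's actual results.

```python
from math import sqrt


def rmse(y_true, y_pred):
    """Root-mean-square error: penalizes large misses more heavily than small ones."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error, in the same units as the data."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error; undefined if any true value is zero."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1.0 is a perfect fit, 0.0 matches predicting the mean."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical observed vs. predicted inflow values.
observed = [310.0, 325.0, 318.0, 340.0]
predicted = [305.0, 330.0, 320.0, 332.0]
for name, fn in [("RMSE", rmse), ("MAE", mae), ("MAPE %", mape), ("R2", r2)]:
    print(name, round(fn(observed, predicted), 3))
```

Lower RMSE, MAE, and MAPE and higher R² indicate a better model; comparing models fairly requires computing all of them on the same held-out test period.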

The authors acknowledge drawbacks, notably the model's significant computational demands and the complexity of hyperparameter tuning, but argue that the gain in predictive accuracy outweighs them, making the LSTM-Transformer a valuable tool for mine water inflow forecasting.
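Part of the tuning cost comes from the fact that every candidate configuration requires a full training run. The sketch below shows plain random search, one of the two methods the study mentions; the search space, `random_search`, and `toy_objective` are hypothetical stand-ins, the last one replacing "train the model and return its validation error".

```python
import random

# Hypothetical search space; the article does not list the ranges the authors searched.
SEARCH_SPACE = {
    "lstm_hidden": [16, 32, 64, 128],
    "num_heads": [1, 2, 4, 8],
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
    "lookback": [6, 12, 24],
}


def sample_config(space, rng):
    """Pick one value uniformly at random for each hyperparameter."""
    return {name: rng.choice(values) for name, values in space.items()}


def random_search(objective, space, n_trials=20, seed=0):
    """Evaluate n_trials random configurations and return the one with the lowest score."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("inf")
    for _ in range(n_trials):
        cfg = sample_config(space, rng)
        score = objective(cfg)          # in practice: train with cfg, return validation error
        if score < best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_objective(cfg):
    """Cheap hypothetical stand-in for a full training run."""
    return abs(cfg["lstm_hidden"] - 64) / 64 + abs(cfg["learning_rate"] - 1e-3) * 100


best, score = random_search(toy_objective, SEARCH_SPACE, n_trials=30)
```

Bayesian optimization, the other method mentioned, keeps the same outer loop but replaces uniform sampling with a surrogate model (often a Gaussian process) fitted to the scores observed so far, which it uses to choose the next configuration to try. That usually needs fewer trials, but each trial is still a full training run.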

Charting the Future

The paper concludes that the LSTM-Transformer model is a significant development for mining operations, predicting water inflow more accurately than the models it was compared against. It also points to further work, including applications in related domains and refinements of the model. The authors present the research both as a contribution to predictive modeling and as a practical response to real-world challenges in the mining sector.

Source:

Shi, J., Wang, S., Qu, P. et al. Time series prediction model using LSTM-Transformer neural network for mine water inflow. Sci Rep 14, 18284 (2024). DOI: 10.1038/s41598-024-69418-z, https://www.nature.com/articles/s41598-024-69418-z