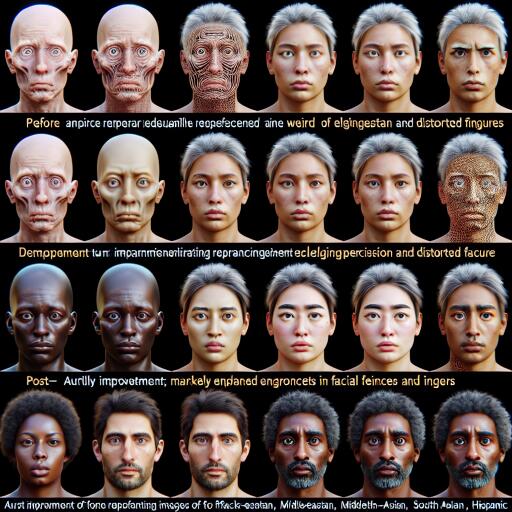

Say goodbye to AI’s weird image errors: New method fixes distorted fingers and faces

In the rapidly evolving world of generative artificial intelligence (AI), platforms like Stable Diffusion, Midjourney, and DALL-E have left audiences in awe with their ability to craft images that seem pulled from the depths of human imagination. Yet, their Achilles’ heel has been a nagging propensity for creating visuals that are, at times, less than perfect. One of the most noticeable problems appears when these models are asked for images with non-square dimensions, which can lead to bizarre distortions such as extra fingers on humans or oddly stretched objects.

Enter a groundbreaking solution from a team of computer scientists at Rice University, promising to make these peculiar visual flaws a thing of the past. They’ve introduced a technique known as ElasticDiffusion, a method that significantly improves the quality of AI-generated images across various aspect ratios. This innovation was unveiled at the prestigious Institute of Electrical and Electronics Engineers (IEEE) 2024 Conference on Computer Vision and Pattern Recognition (CVPR) in Seattle, offering a glimmer of hope for a seamless digital visual future.

At the core of traditional image generation models like Stable Diffusion is a process known as diffusion. These models are trained by adding random noise to images, then learning to remove that noise step by step so they can craft new visuals from noise alone. The hitch? Training has largely been confined to square images, so the models overfit to that shape and falter when asked for aspect ratios outside their training scope, such as the widescreen formats prevalent in today’s monitors and smart devices.
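For readers who want to see the noising idea in concrete terms, here is a minimal sketch of the forward (noise-adding) step and its inversion. It assumes the widely used DDPM-style formulation with a linear noise schedule; the function names, the schedule values, and the four-value "image" are illustrative choices, not details taken from the Rice team's work.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, t, alpha_bars, rng):
    """Forward step: blend clean pixel values with Gaussian noise at timestep t."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal * p + sigma * n for p, n in zip(pixels, noise)]
    return noisy, noise

def recover_clean(noisy, predicted_noise, t, alpha_bars):
    """Invert the forward step, given an estimate of the noise that was added."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    return [(x - sigma * n) / signal for x, n in zip(noisy, predicted_noise)]

rng = random.Random(0)
alpha_bars = make_alpha_bars()
clean = [0.1, -0.5, 0.9, 0.3]  # stand-in for a tiny image
noisy, noise = add_noise(clean, 500, alpha_bars, rng)
# A trained network would *predict* the noise; here we pass the true noise
# back in, purely to show that a perfect prediction recovers the image.
restored = recover_clean(noisy, noise, 500, alpha_bars)
```

In a real system the hard part is the noise prediction itself, which a neural network learns from many training images. If those images are all square, the network's predictions are only reliable at that shape.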

The Rice University team’s ElasticDiffusion strategy aims to tackle this limitation head-on. This method cleverly separates an image’s global and local signals – the former relating to the general outline of the image while the latter deals with specific details like textures and shapes. Traditional models amalgamate these signals, leading to distortions when generating non-square images. ElasticDiffusion enhances the process by organizing these signals in a sequential manner: it begins with the global outline before precisely placing local details, avoiding the common pitfalls of repetition and distortion.
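The global-then-local idea can be illustrated with a toy sketch. This is not the authors' implementation: `global_estimate` and `local_estimate` are hypothetical placeholders for calls to a model that only works on squares of side `NATIVE`, and the nearest-neighbour resizing and patch averaging are assumptions made to keep the example short. The coarse signal is computed once on a square version of the whole canvas and stretched back, while the fine detail is computed patch by patch at the size the model was trained on.

```python
NATIVE = 4  # side length the hypothetical model was trained on

def resize_nearest(grid, out_h, out_w):
    """Nearest-neighbour resize of a 2D list of numbers."""
    in_h, in_w = len(grid), len(grid[0])
    return [[grid[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def tile_origins(length, tile):
    """Start offsets of tiles covering `length`; the last tile is shifted back to fit."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, tile))
    starts.append(length - tile)
    return starts

def global_estimate(square):
    """Hypothetical placeholder: a coarse, whole-image signal (here, the mean)."""
    mean = sum(map(sum, square)) / (len(square) * len(square[0]))
    return [[mean for _ in row] for row in square]

def local_estimate(square):
    """Hypothetical placeholder: fine detail within one patch (deviation from its mean)."""
    mean = sum(map(sum, square)) / (len(square) * len(square[0]))
    return [[v - mean for v in row] for row in square]

def combine(canvas, weight=1.0):
    """Global pass first, then local detail. Assumes both canvas sides are >= NATIVE."""
    h, w = len(canvas), len(canvas[0])
    # Global pass: shrink to the native square, estimate, stretch back.
    coarse = global_estimate(resize_nearest(canvas, NATIVE, NATIVE))
    global_full = resize_nearest(coarse, h, w)
    # Local pass: estimate on native-size patches, averaging where they overlap.
    total = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for top in tile_origins(h, NATIVE):
        for left in tile_origins(w, NATIVE):
            patch = [row[left:left + NATIVE] for row in canvas[top:top + NATIVE]]
            detail = local_estimate(patch)
            for r in range(NATIVE):
                for c in range(NATIVE):
                    total[top + r][left + c] += detail[r][c]
                    count[top + r][left + c] += 1
    return [[weight * global_full[r][c] + total[r][c] / count[r][c]
             for c in range(w)] for r in range(h)]

# A 4 x 6 "widescreen" canvas, wider than the 4 x 4 native size.
canvas = [[float(r * 6 + c) for c in range(6)] for r in range(4)]
result = combine(canvas)
```

Because the model is only ever called on inputs of its native square size, neither pass asks it to work at a shape it was not trained on, which is the intuition behind avoiding repetition and stretching.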

Though ElasticDiffusion presents a leap forward in AI image generation, achieving perfection costs time. The method currently runs 6 to 9 times slower than its contemporaries. But the team, spearheaded by Moayed Haji Ali, is on a mission to refine this. Haji Ali expresses optimism about bringing ElasticDiffusion’s speed in line with that of present-day models without sacrificing its newfound accuracy and versatility in handling various aspect ratios.

The promise of ElasticDiffusion extends far beyond just eliminating visual errors. Its ability to faithfully produce images across different dimensions opens up new vistas in digital artistry, video production, and virtual reality – domains where visual consistency and quality are paramount. As this technology evolves, we stand on the brink of an era where AI-generated images are indistinguishable from those captured through a lens or painted by a brush, all the while opening the door to uncharted realms of digital creativity.