Artists Rally Against AI’s Unconsented Data Harvesting

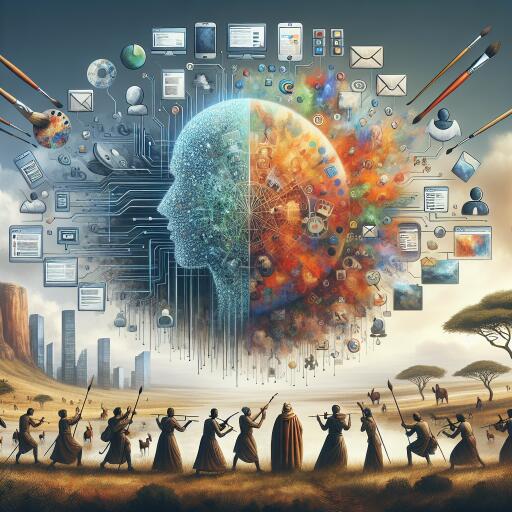

As generative AI creations become increasingly commonplace, artists and content creators are growing concerned about the threat to their intellectual property (IP). Many of these AI tools are developed by indiscriminately mining the internet for data and images, often without explicit permission from the original creators.

In a pushback against this trend, some artists have begun to employ a tactic known as data poisoning, aiming to protect their work from being scraped and used by AI algorithms. This countermeasure was a focal point in a recent episode of The Conversation Weekly podcast, which featured insights from Dan Angus, a professor of digital communication at the Queensland University of Technology in Australia, whose expertise lies in AI, automation, and their societal impacts.

Understanding Data Poisoning

Data poisoning operates on a simple principle: subtly alter digital content in ways that are imperceptible to humans but that can significantly mislead or derail an AI’s learning process. Angus illustrated the concept with a hypothetical scenario in which an image-generating AI produces wildly inaccurate or bizarre results because of poisoned inputs. For instance, when asked to generate an image of a person riding a space bull on Mars in the style of Van Gogh, a model trained on poisoned data might replace the intended bull with a horse or completely alter the environment.

An artist might implement this strategy by embedding an invisible pixel within their digital image: a change too minor for the human eye to notice, but one that could radically confuse an AI algorithm. “It doesn’t take a lot of that to enter a system to start to cause havoc,” Angus stated.
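To make the idea of an imperceptible alteration concrete, here is a minimal sketch in Python. It is an illustration only, not how Nightshade or any other real tool works: it adds small random noise to a toy grayscale image, whereas real poisoning tools are generally understood to compute carefully targeted changes. The function names, the `epsilon` bound, and the sample image are all assumptions made up for this example.

```python
import random


def perturb_image(pixels, epsilon=2, seed=0):
    """Return a copy of a grayscale image with every pixel shifted by at most epsilon.

    `pixels` is a list of rows, each a list of integers from 0 to 255.
    This is a hypothetical helper for illustration, not a real poisoning method.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    poisoned = []
    for row in pixels:
        new_row = []
        for value in row:
            delta = rng.randint(-epsilon, epsilon)
            # Clamp so the result is still a valid 8-bit pixel value.
            new_row.append(max(0, min(255, value + delta)))
        poisoned.append(new_row)
    return poisoned


def max_abs_difference(a, b):
    """Largest per-pixel change between two images of the same size."""
    return max(
        abs(x - y)
        for row_a, row_b in zip(a, b)
        for x, y in zip(row_a, row_b)
    )


# A made-up 16x16 gradient standing in for an artwork.
original = [[(x * 8 + y * 4) % 256 for x in range(16)] for y in range(16)]
poisoned = perturb_image(original, epsilon=2)

# Each pixel moves by no more than 2 levels out of 255.
print("largest pixel change:", max_abs_difference(original, poisoned))
```

A shift of one or two brightness levels out of 255 is far below what most viewers would notice, yet every number a scraper collects from the file has changed. Random noise like this would probably not mislead a model on its own; the sketch only shows how small the visible footprint of an alteration can be.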

January 2024 saw the introduction of Nightshade, a data poisoning tool developed by researchers at the University of Chicago, which garnered significant attention with 250,000 downloads within its first week. The availability of similar tools for audio and video content points to a growing toolkit for creators seeking to safeguard their work.

The Ethical Dilemma in Computer Science

While data poisoning presents a novel form of resistance against unwanted AI scraping, Angus doubts that it can substantially disrupt major AI firms, given its limited scale. Nonetheless, he voices a deeper concern about a prevalent attitude within computer science: that the pursuit of technological advancement often overshadows ethical considerations, particularly around IP rights.

“It breeds a certain set of attitudes around data, which is that data found is data that is yours. That if you can find it online, if you can download it, it’s fair game and you can use it for training an algorithm, and that’s just fine because the ends usually justify the means,” he says. This mindset, according to Angus, points to a profound cultural issue within the field, one that could pave the way for further disregard for creators’ rights and ethical missteps in AI development.

Looking Ahead

The phenomenon of data poisoning highlights a growing unease regarding the balance between technological innovation and the protection of intellectual property. As creators increasingly deploy such measures, the dialogue around the ethical use of data in AI training takes on new urgency. The situation calls for a paradigm shift within the computer science community, demanding a greater emphasis on ethical considerations and respect for the IP rights of creators.

As the debate unfolds, it’s clear that the path forward must involve a collaborative effort among developers, artists, and legal experts to redefine the boundaries of data use in AI. Only through a collective reevaluation of these ethical dimensions can we hope to foster an environment where innovation thrives without infringing upon the creative rights of individuals.